dmp-af Configuration¶


dbt model config options¶

A model's config is the entry point for fine-tuning the model's behavior. It supports the following options:

schedule¶

Tag to define the schedule of the model. Supported tags are:

- @monthly: the model runs once a month, at midnight on the first day of the month (equivalent to the cron schedule 0 0 1 * *)

- @weekly: the model runs once a week, on Sunday at midnight (equivalent to the cron schedule 0 0 * * 0)

- @daily: the model runs once a day at midnight (equivalent to the cron schedule 0 0 * * *)

- @hourly: the model runs once an hour, at the beginning of the hour (equivalent to the cron schedule 0 * * * *)

- @every15minutes: the model runs every 15 minutes (equivalent to the cron schedule */15 * * * *)

- @manual: a special tag marking that the model has no schedule. Read more about it in the tutorial.
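As a minimal sketch, a schedule tag could be set in a model's YAML config as follows. The model name is borrowed from the other examples on this page, and placing schedule directly under config is an assumption based on how the other options are documented. The tag is quoted because a plain YAML scalar cannot begin with @.

```yaml
# dmn_jaffle_analytics.ods.orders.yml (illustrative)
models:
  - name: dmn_jaffle_analytics.ods.orders
    config:
      # Assumed placement: schedule sits directly under config, like the other options.
      schedule: "@daily"  # quoted: a plain YAML scalar cannot start with "@"
```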

schedule_shift (str)¶

Shifts the model's schedule by N units. The unit is set by schedule_shift_unit.

The resulting Airflow DAG is named <domain>_<schedule>_shift_<schedule_shift>_<schedule_shift_unit>s.

schedule_shift_unit (str)¶

Schedule shift unit. Supported units are:

- minute

- hour

- day

- week
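A sketch of how the two shift options might be combined, assuming both sit directly under config next to schedule. The shift value is quoted because the option is documented as str; whether an unquoted integer is also accepted is not confirmed here.

```yaml
# dmn_jaffle_analytics.ods.orders.yml (illustrative)
models:
  - name: dmn_jaffle_analytics.ods.orders
    config:
      schedule: "@hourly"
      schedule_shift: "15"          # N units; quoted because the option is documented as str
      schedule_shift_unit: minute   # one of: minute, hour, day, week
```

Following the naming pattern given for schedule_shift, the generated DAG name for this model would end in _shift_15_minutes.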

dependencies (dict[str, DependencyConfig])¶

Config that defines how the model depends on other models. You can find the tutorial here.

For each dependency, you can specify the following options:

- skip (bool): if set to True, the upstream model will be skipped.

- wait_policy (WaitPolicy): the policy for building the dependency. There are two options:

  - last (default): wait only for the last run of the upstream model, taking into account the execution date of the DagRun.

  - all: wait for all runs of the upstream model within the execution interval. This is useful if the upstream model's schedule is more frequent.

Example:

    # dmn_jaffle_analytics.ods.orders.yml
    models:
      - name: dmn_jaffle_analytics.ods.orders
        config:
          dependencies:
            dmn_jaffle_shop.stg.orders:
              skip: True
            dmn_jaffle_shop.stg.payments:
              wait_policy: last
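For the all policy, a sketch under assumed schedules: suppose the downstream model runs daily while the upstream dmn_jaffle_shop.stg.payments runs hourly (both schedules are hypothetical). The downstream run would then wait for every upstream run within its execution interval rather than only the last one.

```yaml
# dmn_jaffle_analytics.ods.orders.yml (illustrative)
models:
  - name: dmn_jaffle_analytics.ods.orders
    config:
      schedule: "@daily"  # assumed downstream schedule
      dependencies:
        dmn_jaffle_shop.stg.payments:
          # Assumption: this upstream model is scheduled more frequently (e.g. hourly),
          # so wait for all of its runs within the daily execution interval.
          wait_policy: all
```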

enable_from_dttm (str)¶

Date and time when the model should be enabled. The model will be skipped until this date. The format is YYYY-MM-DDTHH:MM:SS. Can be used in combination with disable_from_dttm.

disable_from_dttm (str)¶

Date and time when the model should be disabled. The model will be skipped after this date. The format is YYYY-MM-DDTHH:MM:SS. Can be used in combination with enable_from_dttm.
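A sketch combining both options to define an active window for a model. The dates are arbitrary illustrations, and the values are quoted so that the YAML parser keeps them as strings in the documented YYYY-MM-DDTHH:MM:SS format instead of converting them to timestamps.

```yaml
# dmn_jaffle_analytics.ods.orders.yml (illustrative)
models:
  - name: dmn_jaffle_analytics.ods.orders
    config:
      enable_from_dttm: "2024-01-01T00:00:00"   # skipped until this moment
      disable_from_dttm: "2024-07-01T00:00:00"  # skipped after this moment
```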

domain_start_date (str)¶

Date when the model's domain starts. This option is used to reduce the number of catchup DagRuns in Airflow. The format is YYYY-MM-DDTHH:MM:SS. Each domain takes the minimal domain_start_date across all of its models. The best place to set this option is the dbt_project.yml file.
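A minimal sketch of setting domain_start_date at the domain level in dbt_project.yml. The layout mirrors the cluster example on this page; project_name and domain_name are placeholders, the date is arbitrary, and whether your project requires the dbt + prefix for config keys is not stated in the source.

```yaml
# dbt_project.yml (illustrative)
models:
  project_name:
    domain_name:
      # Applies to every model in the domain; quoted to keep the value a string.
      domain_start_date: "2024-01-01T00:00:00"
```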

dbt_target (str)¶

Name of the dbt target to use for this specific model. If not set, the default target is used. You can find more info in the tutorial.

env (dict[str, str])¶

Additional environment variables to pass to the runtime. All variable values are rendered first by dbt's Jinja and then by Airflow.

Example:

    # dmn_jaffle_analytics.ods.orders.yml
    models:
      - name: dmn_jaffle_analytics.ods.orders
        config:
          env:
            MY_ENV_VAR: "my_value"
            MY_ENV_WITH_AF_RENDERING: "{{'{{ var.value.get(\"my.var\", \"fallback\") }}'}}"

Note

To use Airflow templates in env values, you need to escape the double curly braces as {{ '{{' }} and {{ '}}' }} so that they survive dbt's Jinja rendering.

Pattern: {{<airflow_template>}} → {{ '{{<airflow_template>}}' }}

py_cluster, sql_cluster, daily_sql_cluster, bf_cluster (str)¶

If the dbt target is not set explicitly, it is resolved from these parameters. They are usually set in the dbt_project.yml file for each domain:

    # dbt_project.yml
    models:
      project_name:
        domain_name:
          py_cluster: "py_cluster"
          sql_cluster: "sql_cluster"
          daily_sql_cluster: "daily_sql_cluster"
          bf_cluster: "bf_cluster"

maintenance (DmpAfMaintenanceConfig)¶

Config to define maintenance tasks for the model.

You can find the tutorial here.

Example of configuration:

    # dmn_jaffle_analytics.ods.orders.yml
    models:
      - name: dmn_jaffle_analytics.ods.orders
        config:
          ttl:
            key: etl_updated_dttm
            expiration_timeout: 10

tableau_refresh_tasks (list[TableauRefreshTaskConfig])¶

List of Tableau refresh tasks to trigger after the model runs successfully.

Note

To use this feature, you need to install dmp-af with the tableau extra.

Example of configuration:

    # dmn_jaffle_analytics.ods.orders.yml
    models:
      - name: dmn_jaffle_analytics.ods.orders
        config:
          tableau_refresh_tasks:
            - resource_name: "extract_name"
              project_name: "project_name"
              resource_type: workbook  # or datasource

dbt_run_model DAG¶

If you enable the include_single_model_manual_dag parameter in the Config, dmp-af generates a separate DAG for your dbt project named <dbt_project_name>_dbt_run_model. This DAG is particularly useful for executing arbitrary dbt run commands in scenarios where you need to:

- Backfill data into specific models

- Manually run newly created models

- Re-run models after code changes for a larger time range

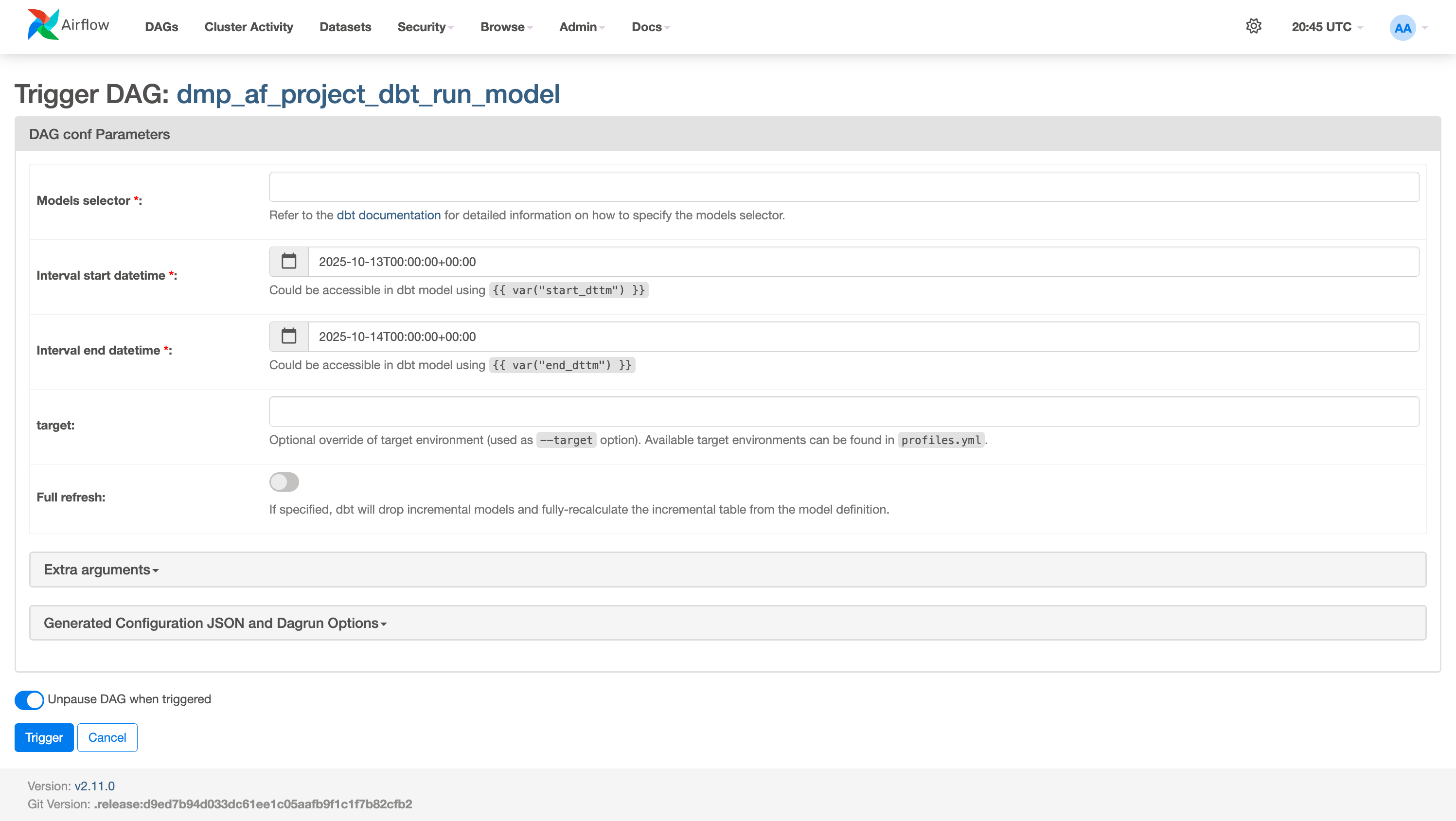

To use this functionality, navigate to the DAG and manually trigger it by pressing the "Play" button. Once triggered, you will be prompted to fill out an input form with all required parameters. Below is an illustration of the input form:

Available Input Parameters¶

- Model Selector:

  - Specify the model by its name (e.g., jaffle_shop.ods.orders).

  - For models that include special selection syntax (e.g., jaffle_shop.ods.orders+), refer to the dbt documentation on node selection syntax for more information.

- Interval Start Datetime:

  - The start datetime that will be passed to the model and can be accessed via {{ var("start_dttm") }}.

- Interval End Datetime:

  - The end datetime that will be passed to the model and can be accessed via {{ var("end_dttm") }}.

Note

For detailed information on how time intervals work in dmp-af, including automatic interval calculation for scheduled runs and manual triggers, see Time Interval Variables.

- Target (optional):

  - The target name to override the default target.

- Full Refresh (optional):

  - When set to True, the model will be run with the --full-refresh flag.

- Extra Arguments:

  - You can pass additional options and flags to the dbt command. To do this, provide them in the Extra arguments section using JSON format, as shown in the example below: